Agentic chat - think twice, answer once

“You’re totally right. The right way to accomplish the task at hand is…” replies your LLM of choice after you point out that the response it just gave you is mostly right, but misses key points. If this is you, you’re not alone. LLMs generalize patterns from vast training data to handle your requests. However, this generalization often prioritizes general knowledge over niche specifics. If your prompt lacks the proper context, the LLM, seeking a broadly relevant, statistically likely answer, might give a generally correct response that misses crucial nuances needed to successfully fulfill your request.

AI coding assistants need to work smarter and operate more autonomously, so today we are announcing Agentic chat for Sourcegraph users. This new agentic chat experience proactively gathers, reviews, and refines relevant context to offer a high-quality, context-aware response to your prompts. It minimizes the user's need to provide context manually, instead using agentic context retrieval to autonomously gather and analyze available context from your codebase, shell, and even the Internet.

Context is king, and with agentic chat enabled, your AI coding assistant gains access to a whole suite of tools for retrieving and refining context, including:

- Code Search: Performs code searches

- Codebase Files: Retrieves the full content from a file in your codebase

- Terminal: Executes terminal commands to gather system or project-specific information

- Web Browser: Searches the web for live context

- OpenCtx: Any OpenCtx provider can be used by the agent

Agentic chat in action

Let’s take a look at some examples of agentic chat in action.

Generating Unit Tests

One of the most common use cases for AI coding assistants is unit test generation. Developers tend to dislike writing unit tests because the work can be repetitive, but the tests are still very important to have.

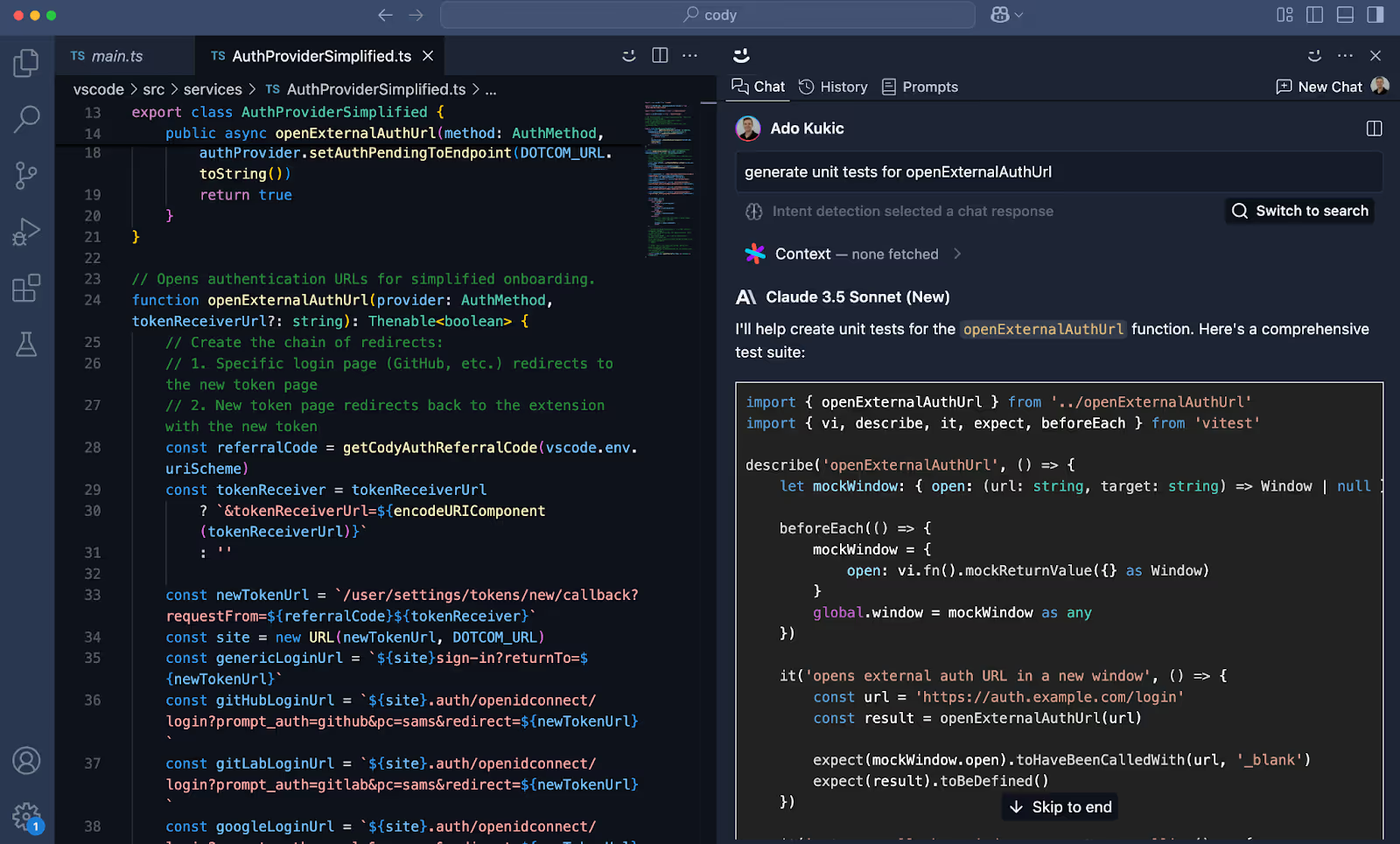

If we ask Cody to generate a unit test for a function without giving it any context, we’ll get unit tests generated, but the odds of them being correct are slim. In the image below, I asked Cody to “generate unit tests for the openExternalAuthUrl function.”

Without specifying a file or repo to look at for context, Cody generated a series of unit tests. Right off the bat, we can see that it tried to import the openExternalAuthUrl function from a non-existent openExternalAuthUrl.ts file. The tests themselves also lack the context of the functionality we want to test.

I could have fixed this manually by tagging the correct file, AuthProviderSimplified.ts, and I would have gotten a much better result.

With agentic chat, I can prompt away without manually providing context or guiding Cody. I can send the exact same prompt, and agentic chat will do the necessary context gathering first and then generate the unit tests I expect.

If we look at the reflection steps that agentic chat took, it first did a code search to find where the openExternalAuthUrl function exists. It then pulled in additional relevant files and used those as context before submitting the request to the LLM. The result was a much higher-quality response and unit tests that actually tested the functionality of our code.
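The reflection steps described above can be sketched as a small loop: plan which tools to call, collect their results, and repeat until no more context is requested. This is only an illustrative sketch, not Cody's actual implementation. The names `ContextItem`, `ToolRequest`, `Tool`, `Planner`, and `gatherContext`, the stubbed tools, and the `maxSteps` cap of 3 are all assumptions made for the example.

```typescript
// Hypothetical types for illustration only; none of these names come from Cody's source.
interface ContextItem {
  source: string; // which tool produced the item, e.g. "search" or "file"
  content: string;
}

interface ToolRequest {
  tool: string;
  query: string;
}

type Tool = (query: string) => ContextItem[];

// The planner stands in for the LLM's reflection step: given the prompt and the
// context gathered so far, it returns the next tool requests, or [] when satisfied.
type Planner = (prompt: string, context: ContextItem[]) => ToolRequest[];

function gatherContext(
  prompt: string,
  tools: Map<string, Tool>,
  plan: Planner,
  maxSteps = 3, // assumed cap for this sketch, not the feature's real default
): ContextItem[] {
  const context: ContextItem[] = [];
  for (let step = 0; step < maxSteps; step++) {
    const requests = plan(prompt, context);
    if (requests.length === 0) break; // nothing more to fetch
    for (const { tool, query } of requests) {
      const run = tools.get(tool);
      if (run) context.push(...run(query)); // unknown tools are skipped
    }
  }
  return context;
}

// Stub tools and a scripted planner that mimic the unit test example:
// first search for the function, then pull in the file that defines it.
const tools = new Map<string, Tool>([
  ["search", (q) => [{ source: "search", content: `match for ${q} in AuthProviderSimplified.ts` }]],
  ["file", (path) => [{ source: "file", content: `contents of ${path}` }]],
]);

const plan: Planner = (_prompt, context) => {
  if (context.length === 0) return [{ tool: "search", query: "openExternalAuthUrl" }];
  if (context.length === 1) return [{ tool: "file", query: "AuthProviderSimplified.ts" }];
  return [];
};

const gathered = gatherContext(
  "generate unit tests for the openExternalAuthUrl function",
  tools,
  plan,
);
console.log(gathered.map((item) => item.source));
```

In a real agent the planner would be an LLM call and the tools would hit the code search index and the file system. The point of the sketch is only the shape of the loop: context accumulates across steps, and the final prompt is sent once the planner stops asking for more.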

Context gathering and refinement

Let’s take a look at another example where automatic context gathering can help. In this scenario, I’ll include the entire sourcegraph/cody repo and ask a non-trivial question.

I’ll ask Cody how agentic chat works, specifically how many steps of reflection the feature performs by default. Given the entire repo as context, Cody fetched 20 files it thought would be valuable, and while the generated answer gave me a great starting point, it didn’t quite answer my question.

With agentic chat, I’ll use the exact same prompt, and even though I gave it the sourcegraph/cody repo as context, agentic chat will still do reflection and analysis to refine and fine-tune that context to generate the correct response. In this instance, the only file Cody needed to generate a high-quality response was deepCody.ts, and the results speak for themselves.

Terminal access with agentic chat

Agentic chat can access your terminal if you grant it access. If the agent decides that it needs the output of a terminal command to answer your prompt, it will request permission to execute the command and use the output to provide a more accurate and helpful response. Let’s see an example of trying to figure out how many files are in a repository.

Without agentic context:

With agentic context:

While this example is pretty simple and straightforward, terminal access unlocks many potential use cases, such as asking the LLM to run an npm audit and summarize any critical issues, or to summarize recent changes to the repo. Note that terminal commands are generated by an LLM, and the user must always approve them manually before they execute.
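The approval requirement can be pictured as a gate that sits between the LLM-generated command and the shell. The sketch below is a hypothetical illustration of that idea, not how Cody implements it. `runWithApproval`, `Approve`, `Execute`, and `CommandResult` are made-up names, and the executor is a stub that returns a canned value instead of touching a real terminal.

```typescript
// Hypothetical approval gate; the names and shapes here are assumptions for illustration.
type Approve = (command: string) => boolean; // stands in for the user's yes/no decision
type Execute = (command: string) => string; // stands in for running the command in a shell

interface CommandResult {
  command: string;
  approved: boolean;
  output?: string; // only present when the command was approved and run
}

function runWithApproval(
  command: string,
  approve: Approve,
  execute: Execute,
): CommandResult {
  // The command never reaches the executor unless the user says yes.
  if (!approve(command)) {
    return { command, approved: false };
  }
  return { command, approved: true, output: execute(command) };
}

// Stub executor that records what it was asked to run and returns a canned value.
const executed: string[] = [];
const fakeExecute: Execute = (command) => {
  executed.push(command);
  return "42"; // placeholder output, not a real file count
};

// A rejected command is never executed.
const denied = runWithApproval("npm audit", () => false, fakeExecute);

// An approved command runs and its output becomes context for the answer.
const allowed = runWithApproval("git ls-files | wc -l", () => true, fakeExecute);

console.log(denied.approved, allowed.approved, executed);
```

Keeping the approval callback and the executor as separate parameters means the gate can be tested without a shell, and the decision to run a command always stays with the user rather than the model.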

Web browser search with agentic chat

LLMs are trained on data that is typically months, and sometimes years, old. Technology moves much faster, and a common issue developers face is stale training data that does not include the latest API changes. The agentic chat experience has access to the web and can perform searches and pull in up-to-date data when needed.

Agentic chat can automatically find, retrieve, and use additional context from the web. In the example below, we show agentic chat looking up the latest quickstart from Twilio and using that as context to help us integrate the API into our application.

Another powerful use case here is integrating with OpenCtx, an open protocol for bringing non-code context to Cody. OpenCtx supports many integrations into tools like Linear, Jira, Slack, Google Docs, Prometheus, and other tools developers rely on for context.

Agentic chat can leverage these tools as well. Below I am asking a very broad and general question about an issue that was reported on our blog.

Without context, we get a very broad and general answer that gives some potential solutions in languages and libraries that we do not use.

With agentic chat and OpenCtx, Cody can pinpoint the exact issue, provide relevant details, including calling out the specific issue by name and number from a vague description, and offer a solution.

Get started with agentic chat

We are starting to roll out agentic chat to Cody Pro and Enterprise users today. To get access to agentic chat, update to the latest version of Cody for your IDE. Enterprise customers will need their admin to enable agentic chat to have it show up in the LLM model selection dropdown.

Learn more about agentic chat in the docs and connect with us on Discord to provide feedback.
