Cody supports additional context through Anthropic's Model Context Protocol

Today marks a significant milestone in AI-assisted development: Anthropic has released Model Context Protocol (MCP), an open standard for connecting AI models with external data. We're proud to announce that Sourcegraph is one of the first tools to support it. This integration opens up new possibilities for bringing extra context into your editor.

For example, you can now pull in GitHub or Linear issues, connect to your Postgres database, and access internal documentation without leaving your IDE.

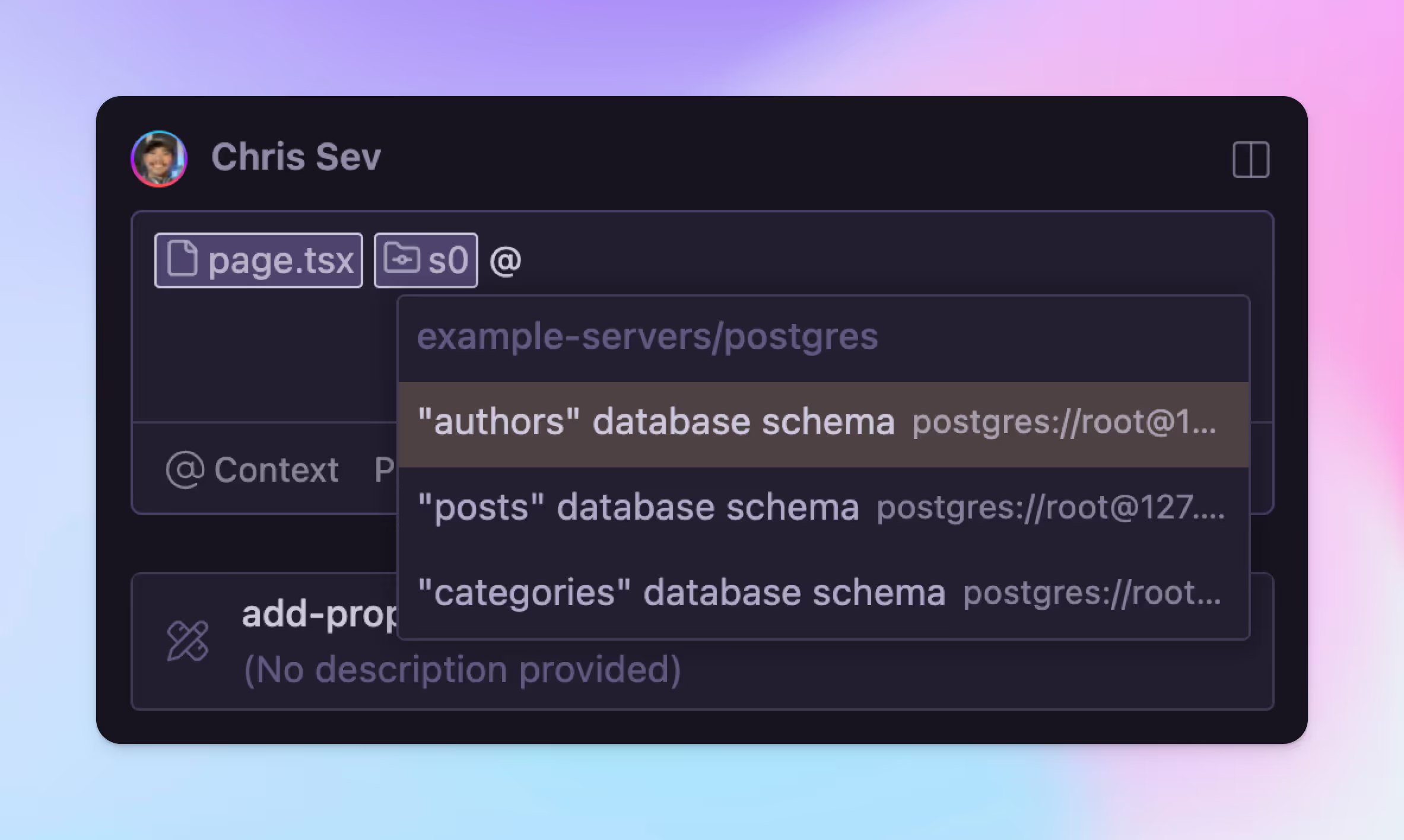

Here's an example of Cody connecting to a Postgres database to write a Prisma query after looking at the database schema:

What is Model Context Protocol?

MCP is Anthropic's new protocol that enables users to provide additional context to LLM-powered applications like Claude.ai. Think of it as a standardized way to feed external information into AI models, making them more aware of your specific use case and environment.

To use MCP, you create an MCP server that connects to the data sources you want to use, then connect an MCP client to that server. The client could be Claude.ai, or you can connect Cody and your editor to your MCP server via OpenCtx.
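Under the hood, MCP messages are JSON-RPC 2.0 requests and responses. The sketch below builds the two requests a client sends to discover and fetch context: `resources/list` and `resources/read`. The `JsonRpcRequest` type and the `makeRequest` helper are illustrative names, not part of any SDK, and the resource URI is made up for the example.

```typescript
// Minimal JSON-RPC 2.0 request shape used by MCP messages.
// This is an illustrative sketch, not the official SDK type.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextId = 1;

// Hypothetical helper: wraps a method name and optional params in a JSON-RPC
// envelope with a fresh numeric id, so responses can be matched to requests.
function makeRequest(method: string, params?: Record<string, unknown>): JsonRpcRequest {
  const request: JsonRpcRequest = { jsonrpc: "2.0", id: nextId++, method };
  if (params !== undefined) {
    request.params = params;
  }
  return request;
}

// Ask the server which resources it can provide context for.
const listRequest = makeRequest("resources/list");

// Fetch the contents of one resource by URI (an illustrative URI).
const readRequest = makeRequest("resources/read", { uri: "postgres://localhost/users/schema" });

console.log(JSON.stringify(listRequest));
console.log(JSON.stringify(readRequest));
```

The client then matches each response to its request by `id`, which is why every request gets a fresh one.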

As a launch partner of Anthropic, we've ensured that Cody integrates seamlessly with MCP, bringing this additional context right into your editor where you need it most.

What can you bring into Cody using MCP?

Anthropic has released several example MCP servers that show how to build servers for various data sources. Cody supports all of these example servers out of the box, and it can also connect to MCP servers you build yourself, which we'll cover later.

- Brave Search - Search the Brave search API

- Postgres - Connect to your Postgres databases to query schema information and write optimized SQL

- Filesystem - Access files on your local machine

- Everything - A demo server showing MCP capabilities

- Google Drive - Search and access your Google Drive documents

- Google Maps - Get directions and information about places

- Memo - Access your Memo notes

- Git - Get git history and commit information

- Puppeteer - Control headless Chrome for web automation

- SQLite - Query SQLite databases

The beauty of MCP lies in its universality. Once you build an MCP server, it becomes a source of context for multiple tools, not just Cody. Here's how it works:

- Your MCP server provides structured context through a standardized protocol.

- Cody connects to this server through OpenCtx, our open standard for external context.

- The context becomes available in your editor through Cody chat.
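The flow above can be sketched without any SDK. A server exposes a list of resources and can read each one. Any client, Cody or otherwise, turns those reads into context for a prompt. `ContextServer`, `InMemoryServer`, and `gatherContext` are hypothetical names chosen for this illustration. A real MCP server answers the same two questions over the protocol rather than through direct method calls.

```typescript
// A resource descriptor, mirroring the fields an MCP resources/list result carries.
interface Resource {
  uri: string;
  name: string;
  mimeType?: string;
}

// Hypothetical interface capturing the two operations a context server offers.
interface ContextServer {
  listResources(): Promise<Resource[]>;
  readResource(uri: string): Promise<string>;
}

// An in-memory stand-in for a real server, keyed by resource URI.
class InMemoryServer implements ContextServer {
  private readonly docs: Map<string, { name: string; text: string }>;

  constructor(docs: Map<string, { name: string; text: string }>) {
    this.docs = docs;
  }

  async listResources(): Promise<Resource[]> {
    return Array.from(this.docs.entries(), ([uri, doc]) => ({
      uri,
      name: doc.name,
      mimeType: "text/plain",
    }));
  }

  async readResource(uri: string): Promise<string> {
    const doc = this.docs.get(uri);
    if (!doc) {
      throw new Error(`Unknown resource: ${uri}`);
    }
    return doc.text;
  }
}

// Any client can use the same server: list what is available, read it,
// and join the results into one context string for the model.
async function gatherContext(server: ContextServer): Promise<string> {
  const resources = await server.listResources();
  const sections: string[] = [];
  for (const resource of resources) {
    const text = await server.readResource(resource.uri);
    sections.push(`## ${resource.name}\n${text}`);
  }
  return sections.join("\n\n");
}
```

Because `gatherContext` depends only on the `ContextServer` interface, swapping in a different server or a different client requires no changes on the other side. That separation is the property the protocol standardizes.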

Trying out the MCP to Cody integration

To get started with MCP, let's clone Anthropic's example servers and try them out. The servers run locally on your computer, and Cody connects to them as a client.

- Clone the example-servers repository.

- Install the dependencies: `npm install`

- Build the project: `npm run build`

This will create a build directory with the compiled server code. We will be pointing Cody to the servers in this directory.
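The client talks to a locally built server by starting it as a child process and exchanging messages over stdin and stdout. The sketch below assumes the MCP stdio transport, where each JSON-RPC message is serialized as a single line of JSON. `encodeMessage` and `LineDecoder` are hypothetical names. The key point is that the decoder must buffer partial chunks, because a read from a pipe can end in the middle of a message.

```typescript
// Serialize one message as a single newline-terminated line.
// JSON.stringify (without indentation) escapes newlines inside strings,
// so the encoded message never contains a raw line break.
function encodeMessage(message: unknown): string {
  return JSON.stringify(message) + "\n";
}

// Accumulates raw chunks from a stream and yields complete messages.
class LineDecoder {
  private buffer = "";

  // Feed one chunk; returns every message completed by this chunk.
  push(chunk: string): unknown[] {
    this.buffer += chunk;
    const messages: unknown[] = [];
    let newline = this.buffer.indexOf("\n");
    while (newline !== -1) {
      const line = this.buffer.slice(0, newline).trim();
      this.buffer = this.buffer.slice(newline + 1);
      if (line.length > 0) {
        messages.push(JSON.parse(line));
      }
      newline = this.buffer.indexOf("\n");
    }
    return messages;
  }
}
```

In Node.js, the decoder would be fed from the `data` events of the child process's stdout. For example, the process could be started with `child_process.spawn("node", [pathToBuiltServer])`, where `pathToBuiltServer` is a placeholder for one of the compiled files in the build directory.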

Add the following to your VS Code JSON settings:

Notice that we added an `mcp.provider.args` field containing the connection string for the Postgres database. With this in place, Cody can connect to the database and use it in your editor.
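On the server side, this sketch assumes that the values in `mcp.provider.args` arrive as ordinary command-line arguments. It shows how a server could read and validate the connection string from `argv`. `parseDatabaseUrl` and `redactPassword` are hypothetical helpers, not the example server's actual code. Redacting the password before a URL is reused in resource URIs or logs keeps the credential out of the context sent to the model.

```typescript
// Hypothetical helper: read the connection string a server would receive as
// its first user argument (argv[0] is the runtime, argv[1] the script path).
function parseDatabaseUrl(argv: readonly string[]): URL {
  const raw = argv[2];
  if (raw === undefined) {
    throw new Error("Please provide a database URL as a command-line argument");
  }
  const url = new URL(raw); // throws a TypeError on malformed input
  if (url.protocol !== "postgresql:" && url.protocol !== "postgres:") {
    throw new Error(`Expected a postgres URL, got ${url.protocol}`);
  }
  return url;
}

// Return a copy of the URL with the password removed, leaving the input untouched.
function redactPassword(url: URL): string {
  const copy = new URL(url.href);
  copy.password = "";
  return copy.href;
}
```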

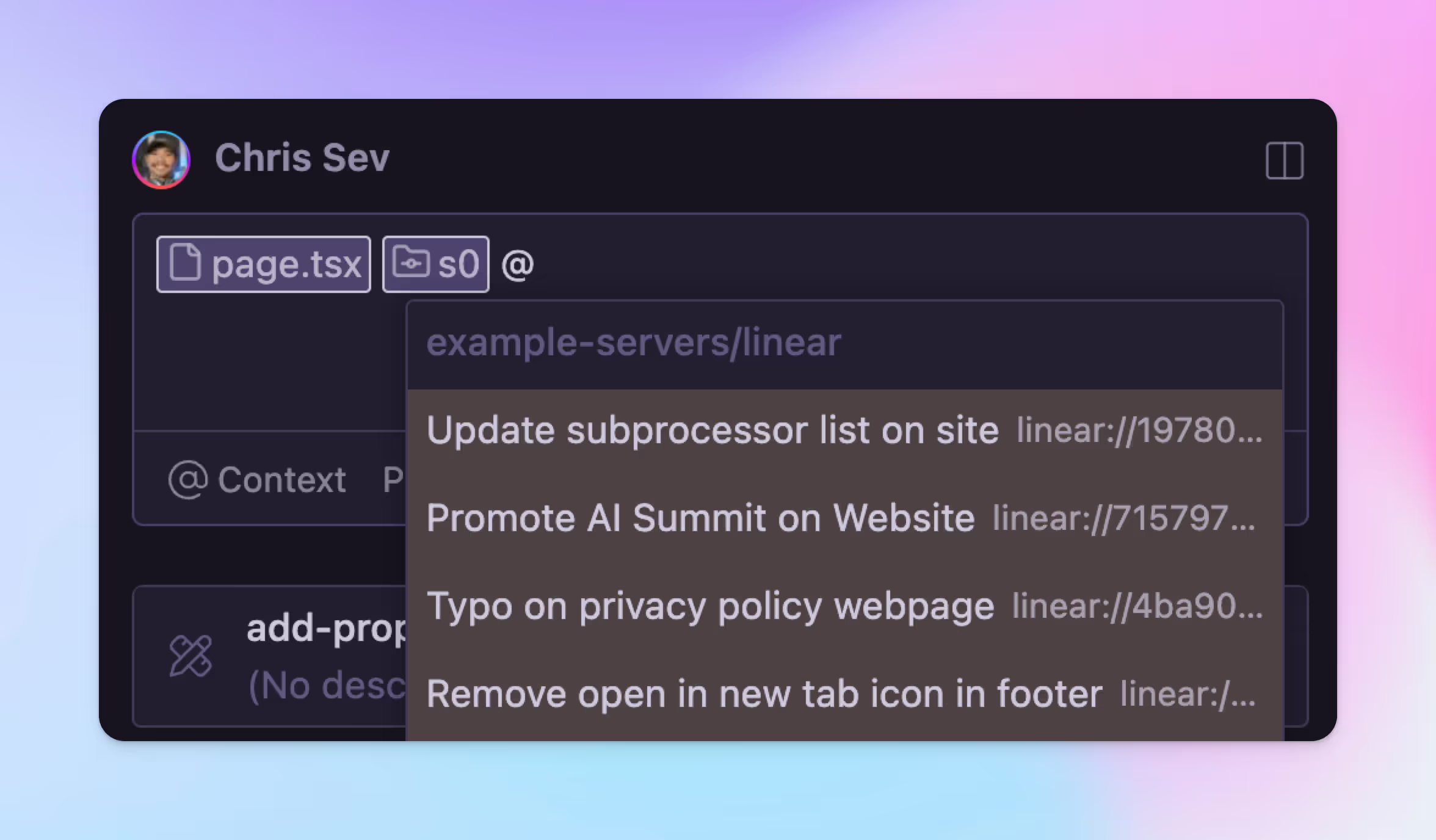

Building a Linear MCP integration

Want to create your own MCP server? Here's a quick tutorial to get you started. The Model Context Protocol team has created a Python SDK and a TypeScript SDK that make it easy to build your own context server.

Here's an example of a Node.js server, built with the TypeScript SDK, that accesses Linear issues. We'll build it in the example-servers folder you cloned earlier. In addition to that folder, you'll need:

- A Linear API key

- Cody installed in your editor

- A new file for your MCP server: `example-servers/src/linear/index.ts`

Here is the full code to create an MCP server that will access Linear issues:

You'll notice that this server registers handlers for two request types: `ListResourcesRequestSchema` and `ReadResourceRequestSchema`. The list handler returns the resources the server can provide context for, and the read handler returns the contents of a given resource.
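The shape of those two handlers can be sketched independently of the SDK and the Linear client. In the sketch below, issues are passed in directly instead of being fetched, and the `linear://issue/<identifier>` URI scheme is a hypothetical choice. A real server would presumably register equivalent functions with the SDK's `setRequestHandler` for `ListResourcesRequestSchema` and `ReadResourceRequestSchema`.

```typescript
// A simplified Linear issue; real issues carry many more fields.
interface Issue {
  identifier: string; // e.g. "ENG-123"
  title: string;
  description: string;
}

// Result shapes modeled on the MCP resources/list and resources/read results.
interface ListResourcesResult {
  resources: { uri: string; name: string; mimeType: string }[];
}
interface ReadResourceResult {
  contents: { uri: string; mimeType: string; text: string }[];
}

const URI_PREFIX = "linear://issue/"; // hypothetical URI scheme

// List handler: one resource per issue the server can provide context for.
function listResources(issues: Issue[]): ListResourcesResult {
  return {
    resources: issues.map((issue) => ({
      uri: URI_PREFIX + issue.identifier,
      name: `${issue.identifier}: ${issue.title}`,
      mimeType: "text/markdown",
    })),
  };
}

// Read handler: resolve a URI back to its issue and return the issue as markdown.
function readResource(issues: Issue[], uri: string): ReadResourceResult {
  if (!uri.startsWith(URI_PREFIX)) {
    throw new Error(`Unsupported URI: ${uri}`);
  }
  const identifier = uri.slice(URI_PREFIX.length);
  const issue = issues.find((candidate) => candidate.identifier === identifier);
  if (!issue) {
    throw new Error(`Issue not found: ${identifier}`);
  }
  return {
    contents: [{ uri, mimeType: "text/markdown", text: `# ${issue.title}\n\n${issue.description}` }],
  };
}
```

Encoding the issue identifier in the URI keeps the read handler stateless. Any URI returned by the list handler can be resolved later without remembering which list call produced it.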

Run `npm run build` again to compile your new server into an `index.js` file that Cody can point to.

Now that you have this new index.js file for your MCP server, connect it to Cody using OpenCtx. Add this to your VS Code JSON settings:

Make sure to add your Linear API key to the mcp.provider.args field. Once you've added the settings, you can see the Linear context provider in the list of available providers in Cody.
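For the server to fetch real issues, the key from `mcp.provider.args` has to end up in requests to Linear's GraphQL API. This is a minimal sketch that rests on three assumptions: the key arrives as the first command-line argument, the endpoint is `https://api.linear.app/graphql`, and a personal API key is sent as-is in the `Authorization` header. `buildIssuesRequest` is a hypothetical helper. It only builds the request and does not send it.

```typescript
// Assumed endpoint; verify against Linear's API documentation.
const LINEAR_GRAPHQL_URL = "https://api.linear.app/graphql";

interface GraphQLRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Hypothetical helper: builds (but does not send) a GraphQL request for issues.
// Assumes the API key is argv[2] and goes directly in the Authorization header.
function buildIssuesRequest(argv: readonly string[], first = 20): GraphQLRequest {
  const apiKey = argv[2];
  if (!apiKey) {
    throw new Error("Please provide a Linear API key as a command-line argument");
  }
  const query = `query Issues($first: Int) {
    issues(first: $first) { nodes { identifier title description } }
  }`;
  return {
    url: LINEAR_GRAPHQL_URL,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json", Authorization: apiKey },
      body: JSON.stringify({ query, variables: { first } }),
    },
  };
}
```

Inside an async handler, the result could be passed straight to `fetch(url, init)`. Keeping request construction separate from sending makes it easy to check what the server will send before wiring it to the list and read handlers.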

What's next?

The combination of Anthropic's Model Context Protocol and Cody opens up endless possibilities for enhancing your development environment with relevant context. Whether you're building internal tools, accessing documentation, or connecting to external services, MCP provides a standardized way to bring that information right into your editor.

We're excited to see what the developer community builds with this integration. Have ideas for MCP servers? Share them with us! The future of context-aware coding is here, and it's more accessible than ever.

Ready to try it out? Install Cody and check out Anthropic's example MCP servers to get started. Happy coding!
