Building DataBot: Our Always-On Data Assistant

"Hey qq, do we have data on how many users performed a Deep Search today?"

"Are we collecting telemetry for MCP calls, and does it include latency information?"

"What version is this customer on?"

Answering questions like these is a core part of working with data and enabling a company to be data-driven. But the cost adds up quickly. Each "quick question" interrupts deep work and forces a context switch.

What if there were a reliable, fast, and secure way to answer these questions, without having to ping a human every time?

At Sourcegraph, we built DataBot, an AI-powered Slack bot that has:

- Full custom data warehouse context (tables, schemas, business logic)

- Access to the right tools to find answers (BigQuery, PostHog, Looker, etc.)

- Deep Search to follow data transformations straight through the code and understand them

The Data Shepherd Problem

Being the "data person" at a company often means you become the human router between every data question and answer. Someone in #sales wants data on how a new customer is onboarding. Someone in #product wants to know adoption numbers. Someone in #engineering wants to know which telemetry events fire when a user clicks a button.

Each question may only take 5-10 minutes, but they rarely arrive together. At ~10 questions a day, that adds up to roughly 50-100 minutes, plus the cost of context-switching around each one. That’s serious time that could've been spent elsewhere.

DataBot doesn’t replace data analysts. It becomes an intermediate step before involving a data person in the loop. Similar to "did you Google this before asking me?", it now becomes "did you ask DataBot?"

Here’s a breakdown of how we made DataBot good at its job.

Tools, Tools, Tools

Equipping DataBot with the right tools is essential. A bare LLM with no tools is just a chatbot that hallucinates table names and creates confusion instead of solving real problems.

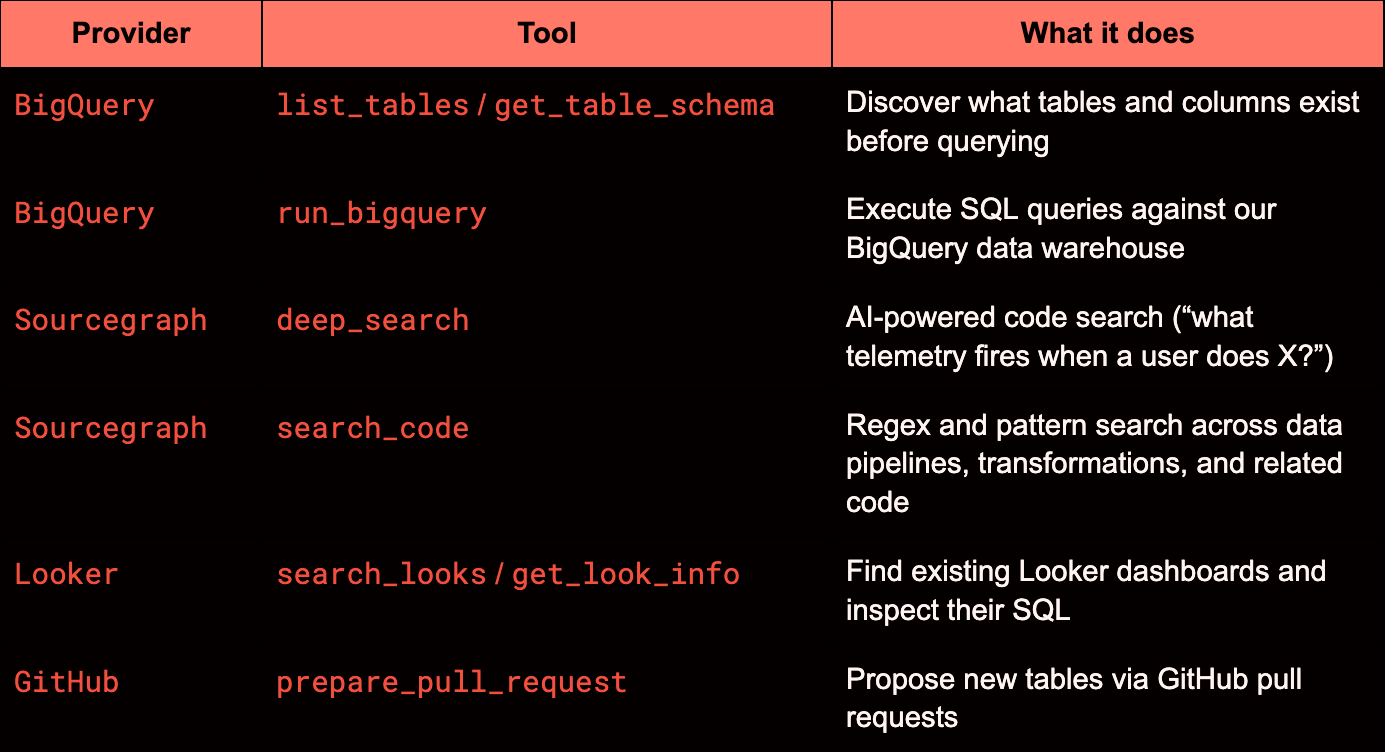

To be useful, DataBot needs to safely explore your data ecosystem: tables, pipelines, Git commits, dashboards, and more, before it ever attempts to answer a question.

Here are some of the core tools DataBot uses:

Our analytics are primarily powered by telemetry emitted from source code. Deep Search plays a critical role, letting us analyze source code, track telemetry events across product flows, and pinpoint which events have changed recently. For our data team, it’s usually the first tool we reach for, long before writing a single SQL query. DataBot usually follows the same path.

But it doesn’t stop at one tool. The magic is in tool composition. DataBot might use deep_search to discover relevant events, get_table_schema to understand the table structure, and run_bigquery to compute the final numbers. The tools work in harmony and usually follow the path a data analyst would take to answer questions.
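To make the composition concrete, here is a minimal sketch of that three-step path. The tool names come from the post, but everything else is an assumption for illustration: the stub bodies, the `events` table, the `name` column, and the event name are hypothetical. In a real agent the model chooses which tool to call next; this sketch fixes the order.

```python
from typing import Dict, List


def deep_search(question: str) -> List[str]:
    """Hypothetical stub: would search source code for relevant telemetry events."""
    return ["deepsearch.submitted"]  # made-up event name, for illustration only


def get_table_schema(table: str) -> Dict[str, str]:
    """Hypothetical stub: would return column names and types for a table."""
    return {"name": "STRING", "uniqueUserId": "STRING", "timestamp": "TIMESTAMP"}


def run_bigquery(sql: str) -> List[dict]:
    """Hypothetical stub: would run a read-only query and return rows."""
    return []  # no real query is executed in this sketch


def answer(question: str, table: str = "events") -> dict:
    steps = []

    # 1. Discover which events matter for the question.
    events = deep_search(question)
    steps.append("deep_search")

    # 2. Check the table actually has the columns the query needs.
    schema = get_table_schema(table)
    steps.append("get_table_schema")
    missing = {"name", "uniqueUserId"} - set(schema)
    if missing:
        raise ValueError(f"table {table} is missing columns: {sorted(missing)}")

    # 3. Compute the numbers, keeping the SQL so it can be shown to the user.
    names = ", ".join(f"'{e}'" for e in events)
    sql = (
        f"SELECT COUNT(DISTINCT uniqueUserId) AS users "
        f"FROM {table} WHERE name IN ({names})"
    )
    rows = run_bigquery(sql)
    steps.append("run_bigquery")

    return {"sql": sql, "rows": rows, "steps": steps}
```

Returning the list of steps alongside the SQL is a design choice of this sketch: it makes the path the bot took easy to inspect.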

As a reminder, access should always be scoped to read-only to prevent any destructive operations.
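Read-only access is best enforced through the permissions of the credentials the bot uses. As a second layer, a query tool can also refuse anything that does not look like a single read statement. The check below is a rough sketch of that idea, not how DataBot implements it; the function name and keyword list are assumptions, and the check is deliberately conservative (it may reject a harmless query that mentions a forbidden keyword inside a string literal).

```python
import re

# Keywords that indicate a write or DDL statement. This list is an
# illustrative assumption, not an exhaustive catalogue.
FORBIDDEN = re.compile(
    r"\b(INSERT|UPDATE|DELETE|MERGE|DROP|CREATE|ALTER|TRUNCATE|GRANT|REVOKE)\b",
    re.IGNORECASE,
)


def is_read_only(sql: str) -> bool:
    """Return True only for a single statement that starts with SELECT or WITH."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        return False  # more than one statement
    if not re.match(r"^(SELECT|WITH)\b", statement, re.IGNORECASE):
        return False
    return FORBIDDEN.search(statement) is None
```

A tool wrapper would call `is_read_only(sql)` before sending the query and return an error to the model when it fails.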

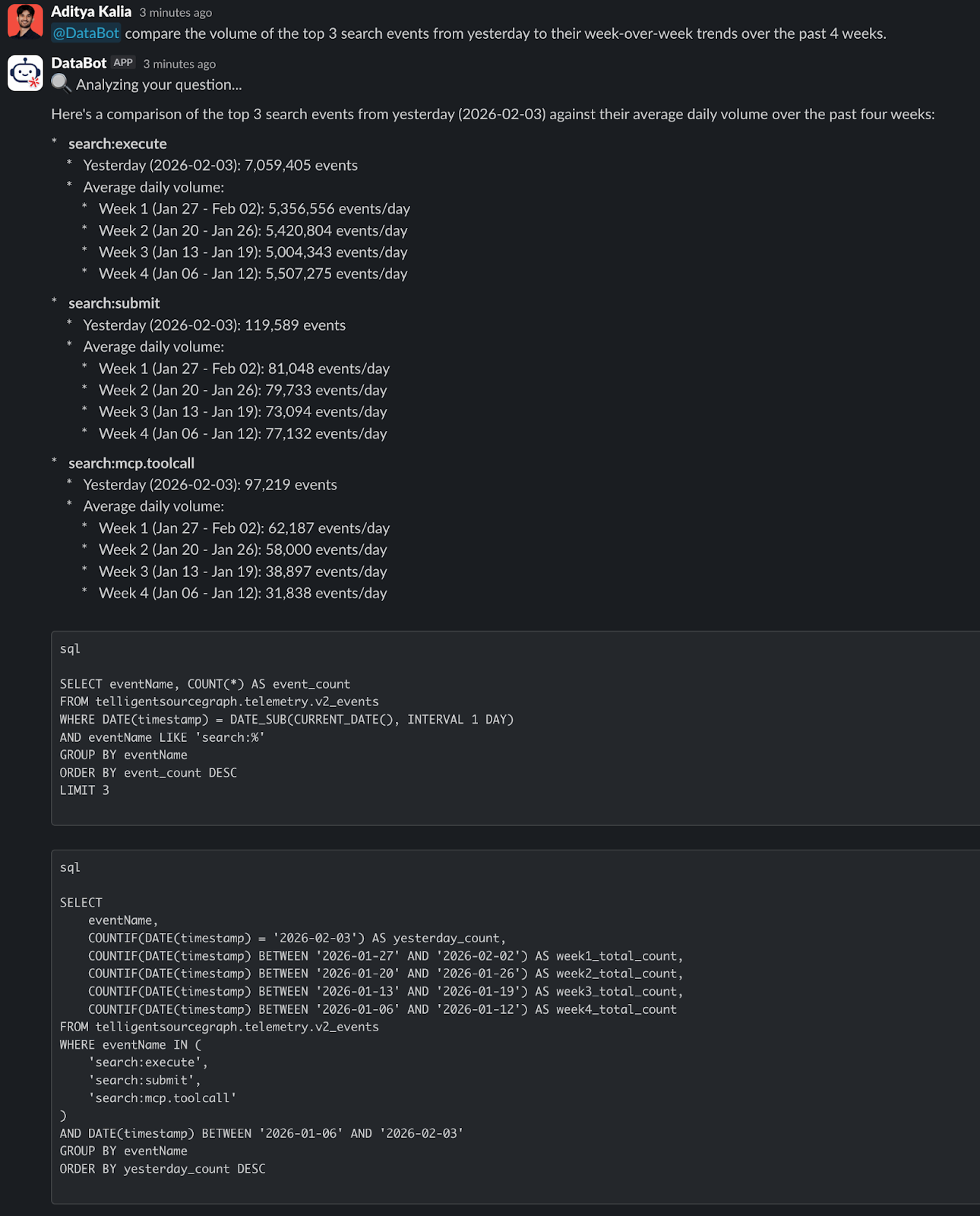

Show Me the $QL

Every answer from DataBot includes the underlying SQL. Data people are (rightfully) skeptical. It’s how we make sure teammates are actually getting the right answers.

When DataBot says "These are the trends for your top 3 search events," showing the SQL lets you quickly verify:

- Did it use the right table?

- Did it filter for the feature correctly?

- Is it using the correct date ranges to compare week-over-week changes?

This made rollout significantly easier, too. No separate QA process was needed; every answer in Slack was self-documenting. Spot a bad join, tweak the prompt/context, and watch the next answer improve. Feedback was instant: the more teammates used DataBot, the more improvements we could make to its context.
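One simple way to make every answer self-documenting is to format the reply so the SQL always travels with the summary. The helper below is a sketch under that assumption; the function name and message layout are hypothetical, and it relies only on Slack rendering text between triple backticks as a code block.

```python
# Built programmatically so this example does not contain a literal fence.
FENCE = "`" * 3


def format_answer(summary: str, sql: str) -> str:
    """Combine a plain-language summary with the SQL that produced it."""
    return f"{summary}\n\n*SQL used:*\n{FENCE}\n{sql.strip()}\n{FENCE}"
```

Because the SQL is part of the message itself, a reviewer can check the table, filters, and date ranges without leaving the thread.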

Building on Previous Answers

Conversations typically don't end with one question; they’re a dialogue. "How many users last week?" leads to "What about the week before?" leads to "Break it down by deployment type."

DataBot keeps the full thread context to handle this seamlessly. It remembers what you asked, which tables it queried, and the assumptions it made along the way. Then it builds on previous answers, so follow-ups feel connected. No having to re-explain, no starting over, just a natural conversation about data.
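A rough sketch of how thread context might be rebuilt on each request: take the messages in the Slack thread, order them by timestamp, and turn them into a role-tagged history for the model. The function name and the `user`/`assistant` role labels are assumptions and would need mapping to whatever the model API expects; the `user`, `text`, and `ts` fields follow the shape of Slack message objects.

```python
from typing import Dict, List


def build_history(thread_messages: List[dict], bot_user_id: str) -> List[Dict[str, str]]:
    """Convert Slack thread messages into an ordered chat history."""
    history = []
    for msg in sorted(thread_messages, key=lambda m: float(m["ts"])):
        # Messages posted by the bot become assistant turns; the rest are user turns.
        role = "assistant" if msg.get("user") == bot_user_id else "user"
        # Drop the leading @mention so the model only sees the question.
        text = msg.get("text", "").replace(f"<@{bot_user_id}>", "").strip()
        if text:
            history.append({"role": role, "content": text})
    return history
```

Passing the whole history on every turn is what lets a follow-up like "What about the week before?" resolve against the earlier question and the tables already queried.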

Internally, we’ve found that most interactions with DataBot involve multiple follow-ups, a good sign of real dialogue, especially for nuanced or open-ended questions.

Maintaining thread context is one thing. Understanding the data itself is another. Which brings us to the next piece of the puzzle: context. The better DataBot understands your tables, pipelines, and business logic, the smarter and more accurate its answers become.

Context is Key

Agents thrive on context, and when working with data, context is everything. We’ve all run into:

- Tables with cryptic names: events, v2_events vs events_legacy

- Columns that look similar but mean different things: user_id vs unique_user_id vs uid

- Business logic buried in tribal knowledge (“for DAU, always filter on eventType.DAU = TRUE”)

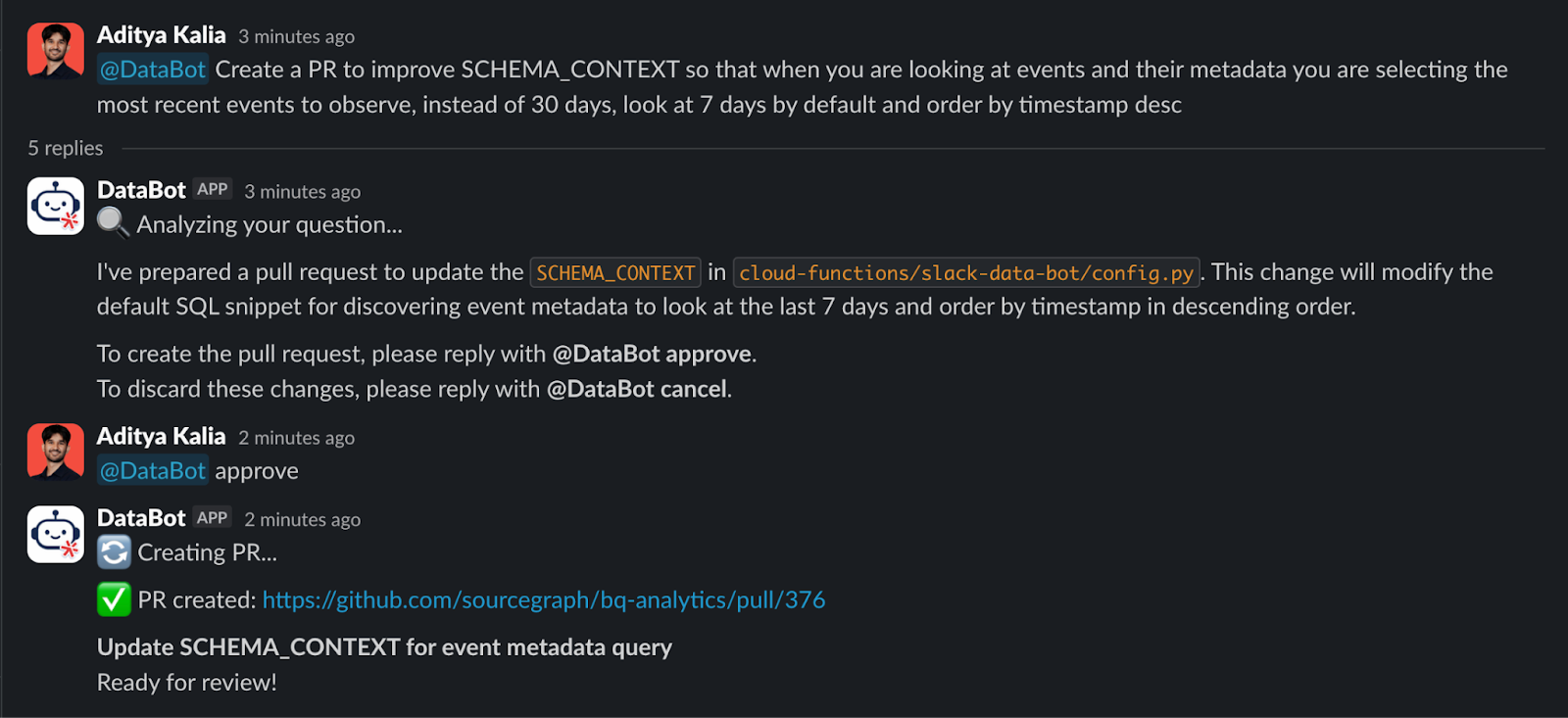

We solve this with SCHEMA_CONTEXT, a structured prompt that teaches DataBot about our data warehouse. Think of it like onboarding a new teammate: we walk DataBot through the ins and outs of our warehouse, including which tables to use for which type of question, where our dev tables live, how our internal definitions work, and the lessons we’ve learned from working with the data.

Example of SCHEMA_CONTEXT rules:

**User counts:** Always use COUNT(DISTINCT uniqueUserId) for user counts. For active users, filter with eventType.DAU = TRUE.

**URL matching:** Always use LIKE with wildcards for URL fields. Never add https:// - data often stores URLs without protocol.

**Website/marketing:** (pageviews, UTM, funnels) → PostHog

Every time DataBot gets something wrong, we add a rule. Over time, the prompt evolves as our team’s knowledge grows.
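As an illustration of the "add a rule" workflow, the rules can be kept as structured data and rendered into the prompt. This is a sketch, not the actual SCHEMA_CONTEXT: the rule text is taken from the examples above, while the `SCHEMA_RULES` name, the helper functions, and the prompt wording are assumptions.

```python
from typing import Dict

# Rule text copied from the examples in this post; the dict layout is hypothetical.
SCHEMA_RULES: Dict[str, str] = {
    "User counts": (
        "Always use COUNT(DISTINCT uniqueUserId) for user counts. "
        "For active users, filter with eventType.DAU = TRUE."
    ),
    "URL matching": (
        "Always use LIKE with wildcards for URL fields. "
        "Never add https:// - data often stores URLs without protocol."
    ),
    "Website/marketing": "(pageviews, UTM, funnels) -> PostHog",
}


def build_schema_context(rules: Dict[str, str]) -> str:
    """Render the rules as one prompt section, one bullet per topic."""
    lines = ["Follow these data warehouse rules when answering:"]
    lines += [f"- {topic}: {rule}" for topic, rule in rules.items()]
    return "\n".join(lines)


def add_rule(rules: Dict[str, str], topic: str, rule: str) -> Dict[str, str]:
    """Return a new rule set with one more lesson learned; the input is not mutated."""
    return {**rules, topic: rule}
```

Keeping rules as data rather than one long string makes each correction a small, reviewable change.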

SCHEMA_CONTEXT is tailored to us at Sourcegraph and captures the core knowledge of our data warehouse: how it’s structured, how it’s used, and the rules that matter. It naturally evolves as our data changes and grows. We also see it behave differently across models (same DataBot, different flavors), which makes ongoing tuning of the prompt essential. In short, investing the time to get this right pays dividends in the long term.

How We Deployed It

Here’s how we got DataBot up and running, and the stack that makes it all work:

Core Stack

- Google Cloud Functions: Receives Slack events, orchestrates tool calls, and executes queries.

- Gemini 2.5 Flash: Fast, cost-effective, and great at tool usage.

- Slack App: Subscribes to @mentions and handles thread context.
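To show how these pieces might fit together, here is a sketch of the routing logic a function receiving Slack events could use. It is written as a plain function over the decoded JSON payload so it runs without any cloud dependency; the function name and the shape of the returned work item are hypothetical. The `url_verification`, `event_callback`, and `app_mention` types and the `thread_ts`/`ts` fields are part of Slack's Events API.

```python
from typing import Tuple


def handle_slack_event(payload: dict) -> Tuple[dict, int]:
    """Route a decoded Slack Events API payload; returns (response_body, status)."""
    # Slack sends this once when the endpoint is registered and expects the
    # challenge value echoed back.
    if payload.get("type") == "url_verification":
        return {"challenge": payload.get("challenge", "")}, 200

    event = payload.get("event", {})
    if payload.get("type") == "event_callback" and event.get("type") == "app_mention":
        # Reply in the existing thread if there is one, otherwise start a
        # thread under the message that mentioned the bot.
        thread_ts = event.get("thread_ts") or event.get("ts")
        return {
            "action": "answer",  # hypothetical work item for the agent loop
            "channel": event.get("channel"),
            "thread_ts": thread_ts,
            "question": event.get("text", ""),
        }, 200

    # Anything else is acknowledged and ignored.
    return {"action": "ignore"}, 200
```

Slack expects a quick acknowledgement of each event, so a real deployment would likely return early and do the tool calls and reply afterwards.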

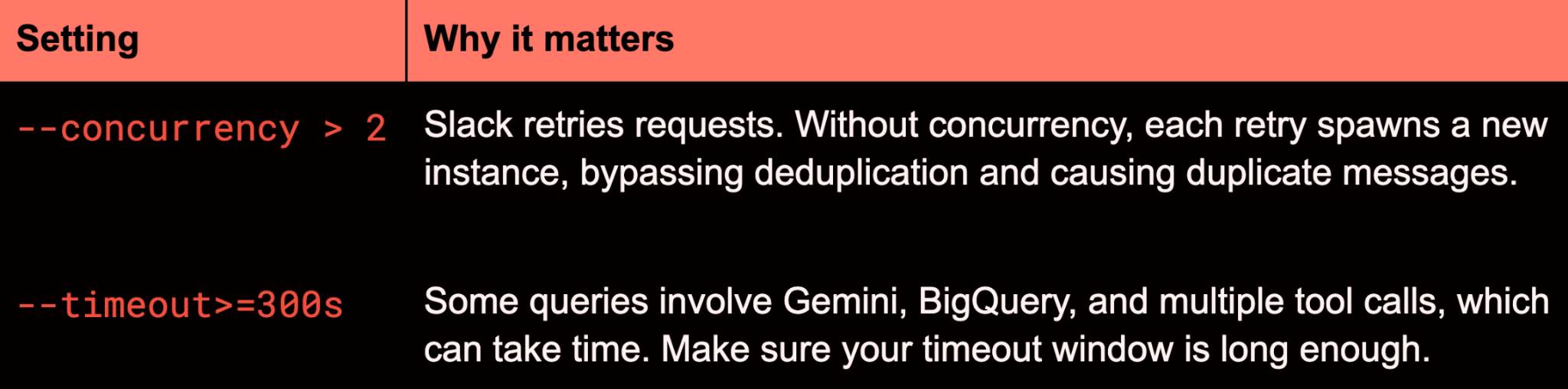

Key Settings That Matter

I personally love this deployment because updates are so fast! For example, pushing a fix to SCHEMA_CONTEXT for improved business logic happens within minutes. That keeps DataBot up to date and ready to answer the next question better.

The Shift

Before DataBot, answering data questions meant stopping what you were doing, writing a query, testing it, pasting results into Slack, and context-switching back. It was slow, had to happen within working hours, and took time that could have been spent elsewhere.

Now, we review DataBot’s answers the way we review PRs: glance at the SQL, confirm the logic, and move on. The work has shifted from doing to auditing, and auditing scales in a way that personally answering every question never could. What used to take 5 to 10 minutes per question now takes maybe 30 seconds to audit.

The best part? DataBot keeps getting better. As models improve, tools evolve, and your context grows, every version becomes more capable.

We’re only just beginning to see what’s possible.

About the Author

Aditya is the Lead Data Engineer at Sourcegraph, with over six years of experience wrangling data. He’s gone from hand-crafting ETL pipelines to leveraging SaaS tools like Fivetran, and now works with AI agents capable of one-shotting entire data pipelines. He writes about the future of data engineering: a world where agents handle the tedious, nitty-gritty data work, freeing engineers to tackle bigger and bolder challenges.

A special thanks to Justin Dorfman and Robert Lin for their contributions to this blog post.
