Beyond working set memory: understanding the cAdvisor memory metrics

At the heart of Sourcegraph’s fast, scalable code search is the Zoekt search engine. As an engineer here at Sourcegraph, I’ve made it a mission to understand and improve the resource usage of Zoekt to help it scale to massive codebases. As a first step, I tracked down some subtle memory leaks that had been plaguing our memory dashboards with concerning spikes. After rolling out the changes to Sourcegraph’s public code search, I was satisfied to see the spikes disappear. Later, I made a big round of memory improvements focused on the Go application that powers search indexing. I again monitored our dashboards while rolling out these fixes, eager to see some of the remaining spikes disappear.

But… the memory usage looked exactly the same! From local benchmarks and from GCP profiles, I knew these changes should significantly decrease the peak Go heap usage. What on earth was going on?

It turned out that I had entirely the wrong impression of what our main memory metric, container_memory_working_set_bytes, represents. As a code search enthusiast, I began to search and dig into the cAdvisor source to understand how these metrics are actually implemented. We ended up “refactoring” our memory dashboards to incorporate other key metrics, and now have better insight into how our services actually use memory.

A common mistake

The cAdvisor docs refer to container_memory_working_set_bytes as the “current working set” of the container. Several sources recommend this as the best memory metric to track, emphasizing that it’s “what the OOM killer is watching for”. We were careful not to use the temptingly-named container_memory_usage_bytes, which includes “all memory regardless of when it was accessed” and doesn’t represent the memory truly “needed” by the application. At first glance, it really seems like the working set metric should correlate closely with the heap and stack usage of our Go processes.

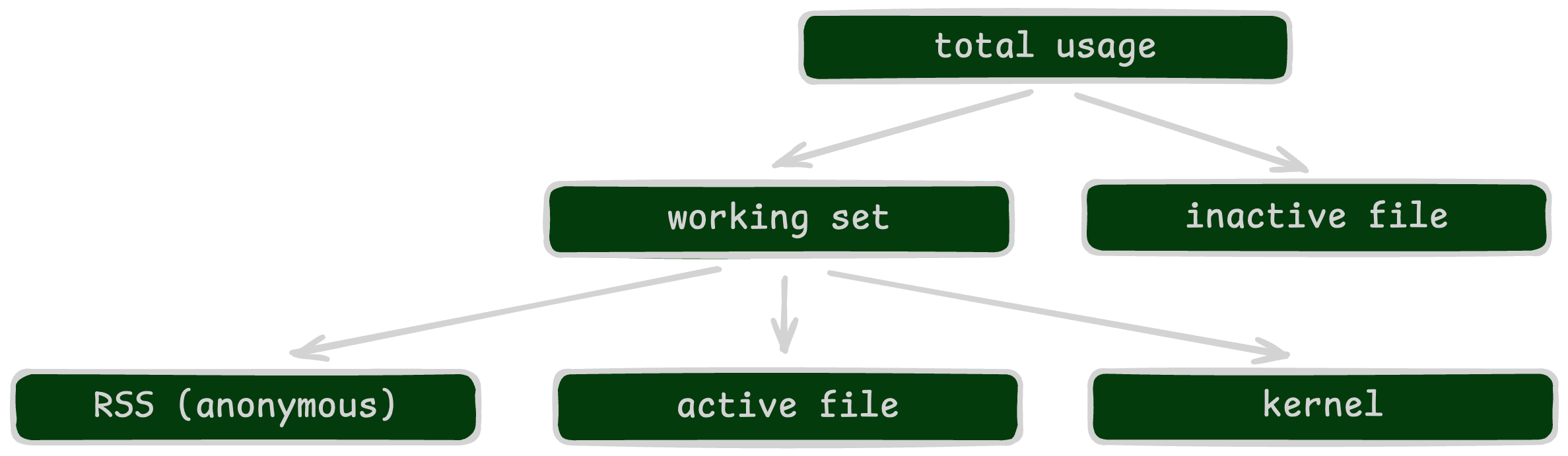

According to its definition, however, this metric includes not only anonymous memory (like stack and heap), but also recently accessed file pages and kernel memory. Digging further into how it’s implemented, we see it’s just the total memory usage (container_memory_usage_bytes) minus “inactive file” memory: pages in the filesystem cache that don’t appear to be in active use. So this is only a loose estimate of the memory the application is currently using.
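
As a rough sketch of that calculation (an illustration of the formula described above, not cAdvisor's actual code), the working set is the usage minus the inactive file pages, floored at zero. The function name and the sample readings are hypothetical.

```go
package main

import "fmt"

// workingSetBytes applies the formula described above: total usage minus
// inactive file pages. The result is floored at zero so the unsigned
// subtraction can never underflow.
func workingSetBytes(usage, inactiveFile uint64) uint64 {
	if inactiveFile > usage {
		return 0
	}
	return usage - inactiveFile
}

func main() {
	const mib = 1 << 20

	// Hypothetical readings for a single container.
	usage := uint64(900 * mib)        // container_memory_usage_bytes
	inactiveFile := uint64(250 * mib) // inactive file pages

	fmt.Printf("working set: %d MiB\n", workingSetBytes(usage, inactiveFile)/mib)
}
```

Note that nothing in this formula distinguishes heap from active filesystem cache, which is why the result can stay flat even when heap usage drops.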

A key insight here is that “actively in use” is not the same thing as “unreclaimable”. When a container is under memory pressure, the OS will attempt to reclaim memory even if it’s been used recently, like evicting pages from the filesystem cache. We can see this from looking at the Linux working set implementation, which documents how pages can be moved from “active” to “inactive” state when there’s not enough room for the active pages.

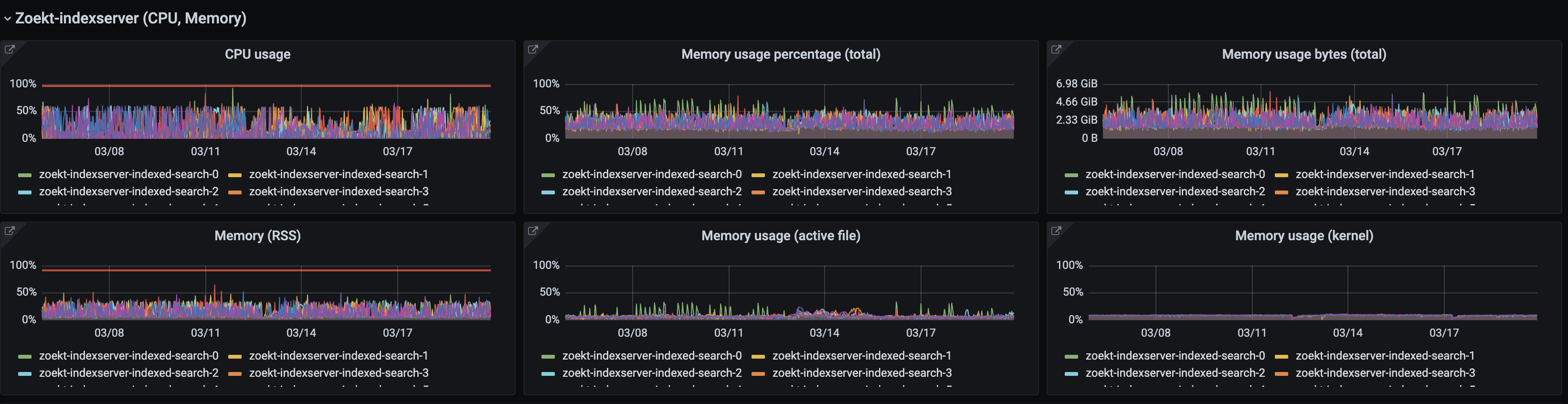

So there wasn’t a good reason to believe container_memory_working_set_bytes would track Go heap usage closely, or that a high working set would trigger the OOM killer. Zoekt’s indexing process constantly reads and writes to disk, so naturally it uses the filesystem cache, which can significantly add to the total working set value [1].

Refactoring the dashboards

This realization made it clear we needed to track other key memory metrics. After looking through candidate metrics, and tracing them down to the OS-level definition in the memory.stat docs, we decided to break down working set usage into three component parts:

- container_memory_rss: the total anonymous memory in use by the application, which includes Go stack and heap. This memory is non-reclaimable, and is often a close proxy for what the “OOM killer” looks at. We’ve found this metric to be indispensable.

- container_memory_total_active_file_bytes: filesystem cache memory that the OS considers "active". This memory can usually still be reclaimed under memory pressure. For file memory to be considered for the "active" list, it just needs to be accessed more than once.

- container_memory_kernel_usage: the total kernel memory in use, some of which is reclaimable under memory pressure [2]. We’ve had some adventures with kernel memory, but that will be left for a future blog post!
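
To make the link to memory.stat concrete, here is a minimal sketch that parses memory.stat-style text and pulls out the anonymous and file cache components. It assumes the cgroup v1 key names (total_rss, total_active_file, total_inactive_file); cgroup v2 uses anon, active_file, and inactive_file instead. The sample values are made up, and kernel memory is left out of the sketch.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// sampleStat is a made-up excerpt in the "key value" format of memory.stat.
const sampleStat = `total_rss 209715200
total_active_file 524288000
total_inactive_file 104857600
`

// parseMemoryStat turns "key value" lines into a map of byte counts.
func parseMemoryStat(text string) (map[string]uint64, error) {
	stats := make(map[string]uint64)
	sc := bufio.NewScanner(strings.NewReader(text))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) != 2 {
			continue // skip blank or malformed lines
		}
		v, err := strconv.ParseUint(fields[1], 10, 64)
		if err != nil {
			return nil, fmt.Errorf("parsing %q: %w", fields[0], err)
		}
		stats[fields[0]] = v
	}
	return stats, sc.Err()
}

func main() {
	stats, err := parseMemoryStat(sampleStat)
	if err != nil {
		panic(err)
	}
	const mib = 1 << 20
	fmt.Printf("anonymous (rss): %d MiB\n", stats["total_rss"]/mib)
	fmt.Printf("active file:     %d MiB\n", stats["total_active_file"]/mib)
	fmt.Printf("inactive file:   %d MiB\n", stats["total_inactive_file"]/mib)
}
```

In this made-up sample, active file cache is more than twice the anonymous memory, the kind of split that a single working set number hides.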

Don’t worry, we didn’t delete our old friend container_memory_working_set_bytes. This metric is still very useful for estimating how much memory the container is putting to good use. If the working set is nearing 100% of what’s available, that might suggest the application would benefit from more memory, and that we’re currently leaving some performance “on the table” by not allocating more memory to the container.

We’ve now refactored our memory dashboards to report these complementary metrics too (and carefully documented their interpretation and intended use). Here’s an example from our busy but happy Zoekt index servers that power Sourcegraph’s public code search. In the new dashboard, we refer to container_memory_working_set_bytes as “Memory usage (total)”.

The impact of mmap

These new dashboards proved insightful for our other services, like Zoekt’s search service. This service executes user searches by loading Zoekt’s index files from disk and consulting the index structures. Like many other search engines, Zoekt uses memory mapping (mmap) to access this index data. Memory mapping is an operating system capability that lets a process read from a file as if it were reading from a contiguous range of memory. When the process reads from some new part of that memory, the OS makes sure to read the corresponding page on disk and store it in filesystem cache. This strategy has a major benefit: the index structures can be read directly from the index files, avoiding the need to hold large amounts of data on-heap. At the same time, memory mapping ensures that recently accessed data is cached in memory (through the filesystem cache), helping maintain good search latency [3].
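
For readers who haven't used mmap from Go, here is a minimal sketch using the standard library's syscall.Mmap on a Unix-like system. It is not Zoekt's actual code: the helper names and the temporary "index" file are hypothetical and exist only to show the access pattern.

```go
package main

import (
	"fmt"
	"os"
	"syscall"
)

// mmapFile maps an entire file read-only. The returned slice is backed by the
// filesystem cache rather than the Go heap; pages are loaded on first access.
func mmapFile(path string) ([]byte, error) {
	f, err := os.Open(path)
	if err != nil {
		return nil, err
	}
	defer f.Close() // the mapping remains valid after the descriptor is closed

	fi, err := f.Stat()
	if err != nil {
		return nil, err
	}
	if fi.Size() == 0 {
		return nil, fmt.Errorf("cannot map empty file %s", path)
	}
	return syscall.Mmap(int(f.Fd()), 0, int(fi.Size()),
		syscall.PROT_READ, syscall.MAP_SHARED)
}

// writeTempFile creates a small stand-in for an index file and returns its path.
func writeTempFile(content string) (string, error) {
	f, err := os.CreateTemp("", "index-*.bin")
	if err != nil {
		return "", err
	}
	defer f.Close()
	if _, err := f.WriteString(content); err != nil {
		return "", err
	}
	return f.Name(), nil
}

func main() {
	path, err := writeTempFile("hello index")
	if err != nil {
		panic(err)
	}
	defer os.Remove(path)

	data, err := mmapFile(path)
	if err != nil {
		panic(err)
	}
	defer syscall.Munmap(data)

	// Reading the slice touches the mapped pages; no explicit read call is needed.
	fmt.Printf("%s\n", data[6:]) // prints "index"
}
```

The bytes behind data never get copied into the Go heap, so memory used this way shows up as file memory rather than anonymous memory.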

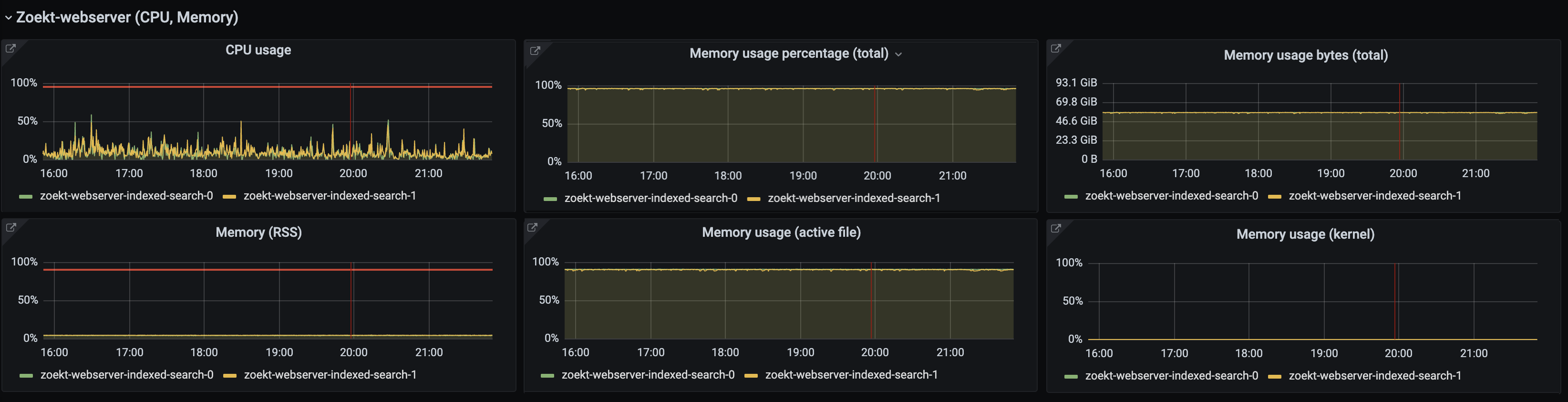

So when performing a search, Zoekt’s search service queries the memory mapped index files directly, with only very little data pulled into the Go process heap. Our new memory dashboards show this usage pattern clearly — here’s an example from an internal instance with only two search servers:

The dashboard shows low container_memory_rss, which fits with our expectation around the service using little heap. On the other hand, container_memory_total_active_file_bytes is high due to the memory mapped index files in filesystem cache. As a result, container_memory_working_set_bytes is near 100% of available memory. But the service is operating fine and not experiencing out-of-memory errors. If more memory is requested than available, the OS evicts some of the plentiful filesystem cache memory.

We mentioned earlier that when container_memory_working_set_bytes is near 100% of available memory, this could be a sign that the application could usefully consume more memory, and that some performance may be “left on the table”. For the Zoekt instance in the dashboard, it’s natural to wonder if the filesystem cache is too small. Say you are searching a repository in Sourcegraph that’s not accessed frequently, and whose data is not currently in filesystem cache. When Zoekt searches this repository, the OS must read its index data from disk, potentially adding significant latency.

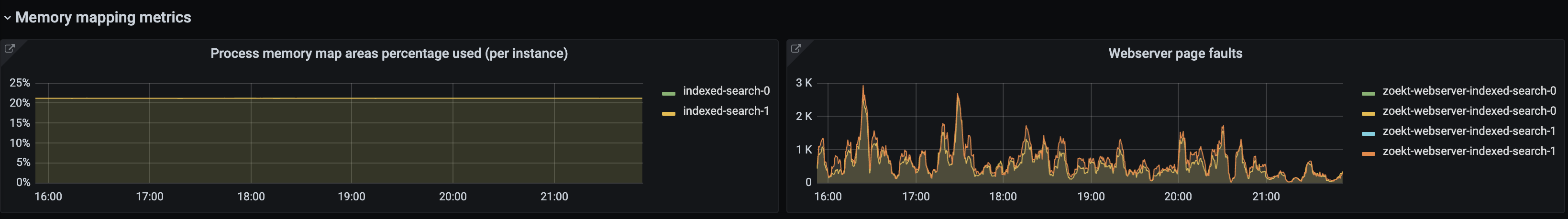

To help determine if this is the case, we also track the number of major page faults through container_memory_failures_total{failure_type="pgmajfault"}. A major page fault occurs when a process requests a page of memory that is not in physical memory, and the OS must load the page from disk.

For this internal instance, we see a high rate of major page faults compared to the number of search operations, indicating that many searches are hitting disk. It’s worth increasing the available memory on the container so that more of the index comfortably fits in filesystem cache.
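
One simple way to quantify "high compared to the number of search operations" is to divide the increase in major page faults by the increase in searches over the same window. The sketch below assumes both values are cumulative counters; the search counter, the type name, and the sample numbers are hypothetical.

```go
package main

import "fmt"

// counterSample holds two cumulative counters read at the same moment.
type counterSample struct {
	MajorFaults uint64 // e.g. from container_memory_failures_total{failure_type="pgmajfault"}
	Searches    uint64 // hypothetical counter of completed searches
}

// majorFaultsPerSearch returns the average number of major page faults per
// search between two samples. It reports false if a counter went backwards
// (for example after a restart) or if no searches ran in the window.
func majorFaultsPerSearch(prev, cur counterSample) (float64, bool) {
	if cur.MajorFaults < prev.MajorFaults || cur.Searches <= prev.Searches {
		return 0, false
	}
	faults := float64(cur.MajorFaults - prev.MajorFaults)
	searches := float64(cur.Searches - prev.Searches)
	return faults / searches, true
}

func main() {
	prev := counterSample{MajorFaults: 1000, Searches: 200}
	cur := counterSample{MajorFaults: 1600, Searches: 500}

	if ratio, ok := majorFaultsPerSearch(prev, cur); ok {
		fmt.Printf("%.1f major faults per search\n", ratio)
	}
}
```

A ratio that stays well above zero under steady traffic suggests searches are regularly waiting on disk, while a ratio near zero suggests the hot part of the index already fits in filesystem cache.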

Source of truth

In investigating these memory metrics, I found it invaluable to consult the cAdvisor and Linux source code. Documentation and blogs (and LLMs!) are critical resources, but if something isn’t quite lining up, there’s no substitute for reading the real source of truth. As one of my coworkers says, “the most fun part of engineering is using your brain”. Happy reading and happy hacking!

Footnotes

1. I am far from the first engineer to notice this issue. The article From RSS to WSS: Navigating the Depths of Kubernetes Memory Metrics is a fantastic resource on these metrics and how they fit together. There are also insightful discussions in the cAdvisor repo itself with proposals on how to refine these memory metrics.

2. This reference from Oracle gives some background on kernel memory statistics, including reclaimable and unreclaimable slab memory.

3. Memory mapping is a common memory management strategy for search engines and some databases, as it avoids the need to explicitly implement a buffer pool of index data. Apache Lucene, which powers the Elasticsearch and Solr search engines, makes extensive use of mmap. However, the recent paper Are You Sure You Want to Use MMAP in Your Database Management System? raised potential downsides of this approach, showing poor benchmark results for workloads like sequential scans. The author of RavenDB responded with a thoughtful rebuttal based on their experiences implementing a storage engine.
